Have you ever wondered how modern applications like Netflix, Spotify, or YouTube run seamlessly for millions of users across the globe? A big part of the answer lies in containers, and in orchestration systems such as Kubernetes that manage them at scale.

In this post, we’ll break down these concepts step by step: what containers are, why they became essential, and how Kubernetes helps manage them efficiently in production environments.

What Are Containers?

Think of an application that needs to run reliably on different machines — say your local laptop, a testing server, or a cloud instance.

In the past, this was usually done using virtual machines (VMs). VMs provided good isolation between applications but came with significant drawbacks — they consumed more memory, took longer to start, and duplicated operating system resources for each instance.

Then came containers, offering a more efficient way to run applications.

Containers don’t carry a full operating system inside; instead, they share the underlying OS kernel while keeping applications isolated from each other. This makes them lightweight, faster to start, and more portable than virtual machines.

Each container image bundles everything the application needs, from source code and runtime dependencies to configuration files, into a single, consistent package.

Because of this, your app runs the same way wherever it’s deployed: on-premises, in the cloud, or on a developer’s laptop.

How Containers Work: The Layer Cake Model

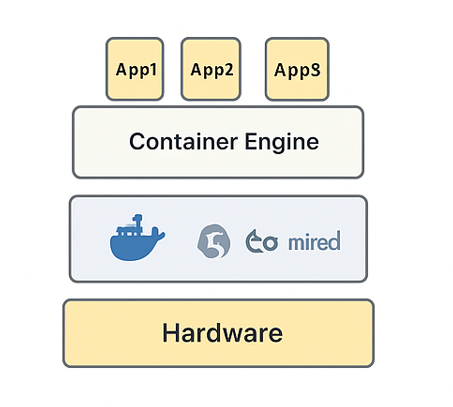

Picture the entire container ecosystem as a series of building blocks stacked on top of each other, where each layer adds a specific capability that helps applications run smoothly and efficiently.

- Infrastructure Layer: This is where everything begins — the actual machines that power your workloads. It could be physical servers in a datacenter or virtual machines hosted on cloud platforms such as AWS, Azure, or Google Cloud.

- Operating System Layer: Installed on that hardware is the host operating system, like Linux or Windows, which provides the kernel and core system services that containers rely on.

- Container Runtime Layer: Above the OS sits the container runtime, such as containerd, along with container engines such as Docker or Podman that build on a lower-level runtime. This layer creates, starts, stops, and networks containers on the host.

- Application Layer (Containers): Finally, the actual containers sit on top. Each container holds a specific application along with its required libraries and configuration, isolated from the other containers on the host.

Because containers reuse the same host operating system kernel, they start almost instantly and use far less CPU and memory than traditional virtual machines. You can deploy several containers on one host, each running a different microservice, without the overhead of multiple full operating systems.

This lightweight approach is one of the key reasons containers have become the foundation of modern application deployment.

Why Containers Are Game-Changing

- Lightweight: Containers run as isolated processes on the host OS, not as full VMs.

- Fast: They start in seconds and can be scaled up or down rapidly.

- Portable: They run consistently across environments ("build once, run anywhere").

- Efficient: You can host multiple containerized applications on the same host machine without wasting resources.

However, as your application grows and you start running hundreds or thousands of containers, managing them manually becomes complex.

The Challenge: Managing Containers at Scale

Let’s say your web app is deployed in 50 containers across multiple servers. What happens if one container crashes?

You would have to identify the failed container yourself and start a replacement with a command like:

docker run <image_name>

Now, imagine doing this for hundreds of containers; it becomes nearly impossible to manage manually. You would also need to balance network traffic, monitor container health, and handle scaling during peak loads.
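To see why this gets painful, here is a minimal sketch of the kind of hand-rolled watchdog script you might end up writing. It shells out to the real `docker ps` and `docker restart` commands; the function names are hypothetical, and the `run` parameter is an assumption added only so the logic can be exercised without Docker installed.

```python
import subprocess
from typing import Callable, List

# A function that runs a docker subcommand and returns its stdout as text.
Runner = Callable[[List[str]], str]


def docker_cli(args: List[str]) -> str:
    """Run a real docker CLI command and return its standard output."""
    result = subprocess.run(["docker", *args], capture_output=True, text=True, check=True)
    return result.stdout


def find_exited_containers(run: Runner = docker_cli) -> List[str]:
    """List the names of containers on this host whose status is 'exited'."""
    output = run(["ps", "-a", "--filter", "status=exited", "--format", "{{.Names}}"])
    return [name for name in output.splitlines() if name.strip()]


def restart_exited_containers(run: Runner = docker_cli) -> List[str]:
    """Restart every exited container, one CLI call at a time."""
    restarted = []
    for name in find_exited_containers(run):
        run(["restart", name])
        restarted.append(name)
    return restarted
```

Note what this script does not do: it only sees containers on a single host, and it ignores health checks, traffic routing, and scaling entirely. Covering those by hand across many servers is where the manual approach breaks down.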

This is exactly where Kubernetes comes to the rescue.

Kubernetes: The Container Orchestrator

Think of Kubernetes as a traffic controller for your containers. It manages and automates everything from deployment to scaling and recovery.

Kubernetes runs containers across multiple machines (called nodes) and ensures that your application always stays healthy and available.

Here’s what Kubernetes does for you:

- Automates deployments and scaling of containers.

- Monitors container health and restarts them automatically if they fail.

- Balances traffic between running instances for optimal performance.

- Ensures high availability by spreading workloads across multiple nodes.
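Behind all of these features is one idea: Kubernetes continuously compares the state you asked for (for example, "three copies of my web app") with what is actually running, and acts to close the gap. The toy Python sketch below illustrates that control-loop idea using a hypothetical in-memory `ToyCluster`; it is not Kubernetes code, only the shape of the logic.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for a cluster: just a list of running replicas."""
    running: List[str] = field(default_factory=list)
    next_id: int = 0

    def start_replica(self) -> str:
        name = f"web-{self.next_id}"
        self.next_id += 1
        self.running.append(name)
        return name

    def stop_replica(self, name: str) -> None:
        self.running.remove(name)


def reconcile(cluster: ToyCluster, desired_replicas: int) -> List[str]:
    """One pass of a control loop: compare desired vs. actual state and close the gap.

    Returns a log of the actions taken.
    """
    actions = []
    # Too few replicas (for example, one crashed): start replacements.
    while len(cluster.running) < desired_replicas:
        actions.append(f"start {cluster.start_replica()}")
    # Too many replicas (for example, after scaling down): stop the extras.
    while len(cluster.running) > desired_replicas:
        victim = cluster.running[-1]
        cluster.stop_replica(victim)
        actions.append(f"stop {victim}")
    return actions


if __name__ == "__main__":
    cluster = ToyCluster()
    print(reconcile(cluster, desired_replicas=3))  # starts three replicas
    cluster.stop_replica("web-1")                  # simulate a crash
    print(reconcile(cluster, desired_replicas=3))  # starts one replacement
```

A real cluster runs loops like this constantly, so recovering from a crash and scaling up or down are the same operation: changing either the actual state or the desired number.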

In short, Kubernetes makes running containers at scale far more manageable, reliable, and efficient.

Pods: The Core Units of Kubernetes

When working with Kubernetes, it’s easy to assume that containers are the smallest unit it manages, but that’s not quite true.

Kubernetes actually operates with something called a Pod, which is the smallest deployable unit in a cluster.

A Pod is like a small workspace where one or more containers run side by side, sharing the same environment.

Inside this workspace, containers can:

- Communicate directly over localhost, because they share the same network namespace and IP address.

- Access shared storage volumes for reading or writing data.
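To make this concrete, here is a sketch of a Pod manifest with two containers that share a volume and a network namespace. It is written as a Python dict and printed as JSON, which kubectl accepts as well as YAML. The Pod name, volume name, images, and sidecar command are illustrative assumptions.

```python
import json

# Hypothetical Pod: an nginx web server plus a busybox sidecar.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},
    "spec": {
        "volumes": [
            # emptyDir: scratch storage that lives exactly as long as the Pod.
            {"name": "shared-html", "emptyDir": {}},
        ],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                # nginx serves files from the shared volume...
                "volumeMounts": [{"name": "shared-html", "mountPath": "/usr/share/nginx/html"}],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",
                # ...which this sidecar writes to, then it polls nginx over
                # localhost, since both containers share one network namespace.
                "command": [
                    "sh", "-c",
                    "echo 'Hello from the sidecar' > /pod-data/index.html; "
                    "while true; do wget -qO- http://localhost:80; sleep 10; done",
                ],
                "volumeMounts": [{"name": "shared-html", "mountPath": "/pod-data"}],
            },
        ],
    },
}

# Save the output as pod.json and apply it with: kubectl apply -f pod.json
print(json.dumps(pod, indent=2))
```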

Kubernetes schedules and replaces Pods as whole units rather than managing each container on its own.

Whenever you deploy an application, Kubernetes launches one or more Pods, each containing your containerised workloads.

If a Pod stops working or gets deleted, a controller such as a Deployment automatically brings up a fresh one to keep the application running smoothly.

This self-healing capability ensures that your services stay online without you having to restart anything manually.
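In practice, this self-healing usually comes from a Deployment, which keeps a set number of identical Pods running, and traffic is spread across those Pods by a Service. The sketch below builds both objects as plain Python dicts; the helper names `make_deployment` and `make_service`, the app name, and the image are assumptions for illustration.

```python
import json
from typing import Any, Dict, List


def make_deployment(name: str, image: str, replicas: int, port: int) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: keep this many Pods running at all times.
            "replicas": replicas,
            # The Deployment owns every Pod whose labels match this selector.
            "selector": {"matchLabels": labels},
            # Template used to create each Pod, including replacements.
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def make_service(name: str, port: int) -> Dict[str, Any]:
    """Build a Service that load-balances traffic across Pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # Wrap both objects in a List so they can be applied with one kubectl command.
    items: List[Dict[str, Any]] = [
        make_deployment("web", "nginx:1.25", replicas=3, port=80),
        make_service("web", port=80),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

If you delete one of the three Pods this Deployment creates, its controller notices that only two match the selector and creates a new Pod from the template.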

Docker vs Kubernetes — What’s the Difference?

In simple terms:

- Docker is great for running containers on a single machine.

- Kubernetes shines when you have hundreds or thousands of containers running across multiple systems.

Why Kubernetes Matters

With Kubernetes, modern applications can:

- Scale automatically based on traffic.

- Recover quickly from failures.

- Roll out updates with little or no downtime.

- Run efficiently across hybrid and multi-cloud environments.
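Automatic scaling based on load is typically handled by a HorizontalPodAutoscaler. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no action taken when the ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below applies that rule and clamps the result to min/max bounds; the function name and default bounds are assumptions, and the real controller has further behaviour (such as stabilization windows) that is not modelled here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Estimate the replica count a HorizontalPodAutoscaler would aim for.

    Core rule: ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))
```

For example, 3 replicas averaging 100% of their requested CPU against a 50% target would be scaled to ceil(3 × 100 / 50) = 6, while a reading of 52% against the same target falls inside the tolerance band and leaves the count unchanged.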

It has become the de facto standard for container orchestration: Google originally created it, Red Hat builds its OpenShift platform on it, and companies such as Spotify run large-scale production systems on it.

Conclusion

Containers revolutionised the way we build and deploy applications, but Kubernetes took it a step further by making them manageable at scale.

In this post, you learned:

- What containers are and how they work.

- Why managing containers manually is difficult.

- How Kubernetes solves these challenges efficiently.

In the next post, we’ll take a deep dive into Kubernetes architecture, exploring nodes, control plane components, and how they interact to keep your applications running smoothly.

If you found this guide helpful, don’t forget to share it and subscribe to stay updated with more DevOps and Kubernetes tutorials.