Service discovery is crucial in Kubernetes: it is how microservices find and talk to each other. Keeping it reliable is essential for a stable and responsive environment.

Service Discovery in Kubernetes:

Service discovery is the Kubernetes feature that lets pods find and communicate with each other within the cluster. A service abstracts a group of pods behind a single DNS name and IP address, so applications can reach those pods without knowing where each one runs or what its IP address is.

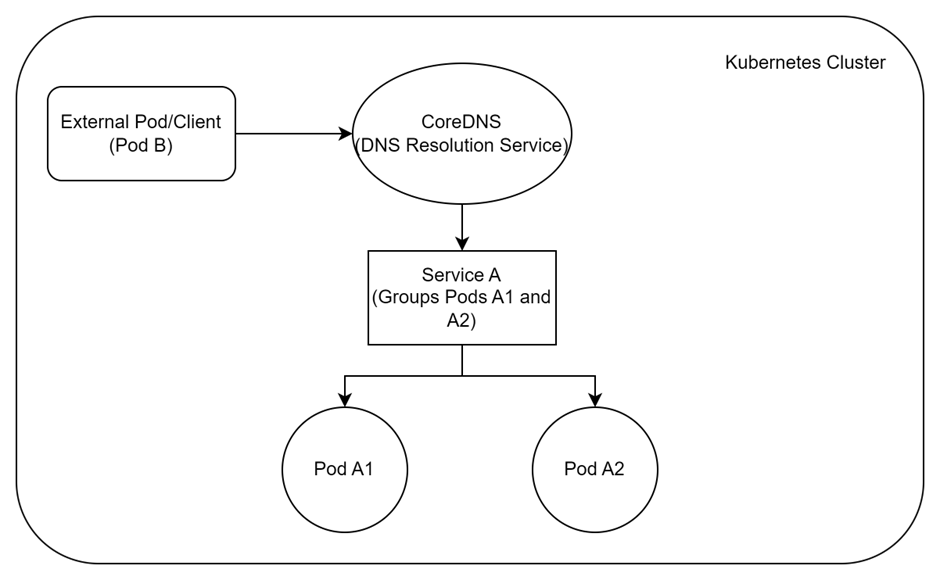

When a service is created, Kubernetes assigns it a stable virtual IP address (the ClusterIP) and a DNS name, which is registered in the cluster’s DNS, typically served by CoreDNS. When a pod needs to reach the service, it looks up that DNS name; CoreDNS resolves it to the ClusterIP, and traffic sent to the ClusterIP is forwarded (by kube-proxy, in a typical setup) to one of the pods behind the service.
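The lookup described above can be sketched in Python. This is a minimal illustration, not a Kubernetes client: it assumes the default cluster domain cluster.local (clusters can be configured with another one) and that the code runs inside a pod, whose resolver is normally configured to query CoreDNS. The helper names service_fqdn and resolve_ipv4 are hypothetical.

```python
import socket
from typing import List

# Hypothetical helpers for illustration; not part of any Kubernetes library.


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified DNS name of a service.

    Assumes the common default cluster domain "cluster.local".
    """
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve_ipv4(name: str) -> List[str]:
    """Resolve a name to its IPv4 addresses with the system resolver.

    Inside a pod the system resolver normally queries CoreDNS, so for a regular
    (non-headless) service this returns its ClusterIP.
    Raises socket.gaierror if the name cannot be resolved.
    """
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    addresses: List[str] = []
    # Each entry is (family, type, proto, canonname, (address, port)); keep unique addresses in order.
    for *_, sockaddr in infos:
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses
```

Inside a pod, resolve_ipv4(service_fqdn("my-service", "default")) (a hypothetical service in the default namespace) should return the service’s ClusterIP, or pod IPs for a headless service; a socket.gaierror means the name did not resolve. From pods in the same namespace the short name my-service also works, because the pod’s /etc/resolv.conf lists the cluster search domains.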

There are two main types of service discovery in Kubernetes:

DNS-based Service Discovery:

- When a service is created, Kubernetes automatically assigns it a DNS name.

- Pods can access the service using this DNS name, which CoreDNS resolves to the service’s ClusterIP.

Environment Variable-based Service Discovery:

- Kubernetes also injects environment variables containing each service’s IP address and port into pods, for example `MY_SERVICE_SERVICE_HOST` and `MY_SERVICE_SERVICE_PORT` for a service named `my-service`.

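- This method is less flexible: the variables are set only when a pod starts, so a pod cannot see services created after it started until it is restarted. It can still be useful in some cases.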
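The naming rule for these variables is mechanical: the service name is upper-cased and dashes become underscores, so a service called redis-primary yields REDIS_PRIMARY_SERVICE_HOST and REDIS_PRIMARY_SERVICE_PORT. A minimal Python sketch of reading them, using hypothetical helper names:

```python
import os
from typing import Mapping, Optional, Tuple

# Hypothetical helpers for illustration; not part of any Kubernetes library.


def service_env_names(service: str) -> Tuple[str, str]:
    """Return the host/port variable names injected for a service.

    The service name is upper-cased and dashes become underscores,
    e.g. "redis-primary" -> REDIS_PRIMARY_SERVICE_HOST / REDIS_PRIMARY_SERVICE_PORT.
    """
    prefix = service.upper().replace("-", "_")
    return f"{prefix}_SERVICE_HOST", f"{prefix}_SERVICE_PORT"


def lookup_service_env(
    service: str, env: Optional[Mapping[str, str]] = None
) -> Optional[Tuple[str, int]]:
    """Read a service's host and port from the environment, or None if absent.

    None is expected for a service created after this pod started,
    because the variables are only populated at pod start-up.
    """
    env = os.environ if env is None else env
    host_var, port_var = service_env_names(service)
    host, port = env.get(host_var), env.get(port_var)
    if host is None or port is None:
        return None
    return host, int(port)
```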

Explanation of the diagram above:

- External Pod/Client (Pod B): Requests the service.

- CoreDNS: Resolves the service name to a ClusterIP.

- Service A: Acts as a load balancer and directs the request to one of the Pods (A1 or A2).

- Pods A1 and A2: The actual instances handling the request.

For a deeper understanding of CoreDNS, refer to the official documentation: Using CoreDNS for Service Discovery

Common Issues

DNS Resolution Problems

DNS resolution issues are common in Kubernetes clusters. These problems can lead to failed service discovery, where services cannot find each other by their names. Diagnosing and fixing these issues requires checking the CoreDNS logs and testing DNS queries.

Example: Diagnosing DNS Resolution Issues

First identify the CoreDNS pod, then check its logs.

```
root@rke2-server1:~# kubectl get pod -n kube-system | grep dns
root@rke2-server1:~# kubectl logs -n kube-system rke2-coredns-rke2-coredns-84f49dccc9-kj2hs
```

Replace the pod name with the one returned by the first command. The second command retrieves the CoreDNS pod’s logs; errors such as SERVFAIL or NXDOMAIN may indicate DNS resolution problems.
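Because a busy CoreDNS pod can log a lot, a small script can tally the response codes. This Python sketch assumes log lines in the format of CoreDNS’s log plugin, where the response code appears as a separate word, plus [ERROR] lines from the errors plugin; per-query lines only appear if the log plugin is enabled in the Corefile. Keep in mind that some NXDOMAIN responses are normal: because of the search domains in a pod’s /etc/resolv.conf, a lookup often tries several suffixed names before the one that exists. The function name summarize_coredns_log is hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

# Response codes that commonly appear as separate words in CoreDNS query logs.
RCODE_PATTERN = re.compile(r"\b(NOERROR|NXDOMAIN|SERVFAIL|REFUSED)\b")


def summarize_coredns_log(lines: Iterable[str]) -> Counter:
    """Count DNS response codes and [ERROR] lines in CoreDNS log output.

    Hypothetical helper; assumes the log/errors plugin line formats described above.
    """
    counts: Counter = Counter()
    for line in lines:
        match = RCODE_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
        if "[ERROR]" in line:
            counts["ERROR_LINES"] += 1
    return counts
```

For example, save it as a module (the file name coredns_log.py is hypothetical) and run kubectl logs -n kube-system <coredns-pod> | python3 -c "import sys, coredns_log; print(coredns_log.summarize_coredns_log(sys.stdin))".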

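Fix: If errors are found, review the CoreDNS configuration (the Corefile, stored in a ConfigMap in kube-system). A common fix is to restart the CoreDNS pods to clear any temporary issues.

```
root@rke2-server1:~# kubectl rollout restart deployment rke2-coredns-rke2-coredns -n kube-system
```

This command restarts the CoreDNS deployment, which can clear transient DNS issues; errors that persist after a restart point to a configuration or network problem. The deployment name varies by distribution: on RKE2 it is rke2-coredns-rke2-coredns, as the pod name above shows, while kubeadm-based clusters usually name it coredns. Confirm the name with kubectl get deployment -n kube-system.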

Service Connectivity Issues

Service connectivity issues occur when a service exists but traffic still does not reach its pods. A common cause is a service selector that does not match the pod labels, which leaves the service with no endpoints.

Example: Testing Service Connectivity

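```
root@rke2-server1:~# kubectl exec -it <pod-name> -- ping <service-name>
```

Replace <pod-name> with the name of a running pod and <service-name> with the service you want to reach. Interpret the result with care: a ClusterIP is a virtual address, and with kube-proxy in its default iptables mode it usually does not answer ping, so packet loss alone does not prove the service is broken. What the command does show is whether the name resolves: if ping reports an unknown host or bad address, the problem is DNS; if it prints the service’s IP address, DNS works and a request to the service port is the better connectivity test.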
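A TCP connection to the service port is usually a more telling test than ping, because it goes through the same DNS lookup and service routing as real traffic. A minimal Python sketch, assuming the client pod image includes Python 3 (many minimal images do not); check_tcp is a hypothetical helper:

```python
import socket


def check_tcp(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper. Unlike ping (ICMP), this resolves the name and then
    connects to the actual service port, like application traffic does.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refused connections and timeouts.
        return False
```

Run from inside the client pod, check_tcp("my-service", 80) (hypothetical service name and port) returns False for DNS failures, refused connections, and timeouts alike; to tell them apart, catch socket.gaierror separately before the generic OSError.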

Fix: Check the service and pod definitions. Ensure that the service selector matches the pod labels.

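```
root@rke2-server1:~# kubectl get service <service-name> -o yaml
root@rke2-server1:~# kubectl get pod --show-labels
```

Compare the selector field in the service YAML with the labels shown for each pod: a pod is selected only if it carries every key/value pair in the selector. Running kubectl get endpoints <service-name> shows which pod IPs the service currently routes to; an empty list usually means the selector matches no ready pods.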
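The matching rule fits in a few lines of Python. This is a sketch of the equality-based matching a service selector uses, with hypothetical helper names; the inputs are the spec.selector map from the service YAML and the metadata.labels map of a pod.

```python
from typing import List, Mapping

# Hypothetical helpers for illustration; not part of any Kubernetes library.


def selector_matches(selector: Mapping[str, str], labels: Mapping[str, str]) -> bool:
    """True if the pod carries every key/value pair of the service selector.

    Extra labels on the pod are fine. A service with an empty selector does not
    select pods automatically, so it is treated as matching nothing.
    """
    if not selector:
        return False
    return all(labels.get(key) == value for key, value in selector.items())


def unmatched_pairs(selector: Mapping[str, str], labels: Mapping[str, str]) -> List[str]:
    """List the selector pairs the pod does not satisfy, to pinpoint a mismatch."""
    return [f"{key}={value}" for key, value in selector.items() if labels.get(key) != value]
```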

Network Policies

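Network policies control the traffic allowed to and from pods. A misconfigured policy can break service discovery: for example, a policy that restricts egress but does not allow DNS traffic (UDP and TCP port 53) to the CoreDNS pods prevents every pod it selects from resolving service names.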

Example: Describing Network Policies

```
root@rke2-server1:~# kubectl describe networkpolicy <policy-name> -n <namespace>
```

This command shows the ingress and egress rules the policy enforces. Check them for any unintended restrictions.
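To spot a missing DNS rule quickly, the policy can be fetched as JSON with kubectl get networkpolicy <policy-name> -n <namespace> -o json and checked with a script. The Python sketch below is deliberately rough and hypothetical: it looks at a single policy, ignores the to peers of each rule, does not resolve named ports, and only reports whether port 53 is reachable at all for the given protocol.

```python
from typing import Any, Dict

DNS_PORT = 53


def _port_covers_dns(port_entry: Dict[str, Any], protocol: str) -> bool:
    """True if one NetworkPolicyPort entry covers port 53 for the given protocol.

    Protocol defaults to TCP and an entry without "port" matches every port.
    Named ports (strings) are not resolved here.
    """
    if port_entry.get("protocol", "TCP") != protocol:
        return False
    port = port_entry.get("port")
    if port is None:
        return True
    if not isinstance(port, int):
        return False  # named port: cannot tell without the pod spec
    end_port = port_entry.get("endPort", port)
    return port <= DNS_PORT <= end_port


def allows_dns_egress(policy: Dict[str, Any], protocol: str = "UDP") -> bool:
    """Rough check: does this one policy leave egress to port 53 open?

    Hypothetical helper, not a full policy evaluator: the "to" peers are ignored,
    and so are other policies selecting the same pods (policies are additive).
    """
    spec = policy.get("spec", {})
    egress_rules = spec.get("egress") or []
    policy_types = spec.get("policyTypes")
    # Without policyTypes, Egress is only enforced if the policy has egress rules.
    restricts_egress = ("Egress" in policy_types) if policy_types else bool(egress_rules)
    if not restricts_egress:
        return True
    for rule in egress_rules:
        ports = rule.get("ports")
        if not ports:
            return True  # a rule without ports allows every port
        if any(_port_covers_dns(p, protocol) for p in ports):
            return True
    return False
```

DNS uses UDP port 53 first and falls back to TCP for large responses, so it is worth checking both with allows_dns_egress(policy) and allows_dns_egress(policy, protocol="TCP").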

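Fix: To debug, temporarily allow all traffic, then gradually tighten the rules while testing service communication. Because standard Kubernetes network policies are additive, applying an allow-all policy such as the following opens traffic for every pod in the namespace without editing the existing policies.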

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-all
  namespace: <namespace>
spec:
  podSelector: {}
  ingress:
    - {}
  egress:
    - {}
  policyTypes:
    - Ingress
    - Egress
```

This YAML manifest allows all traffic to and from the pods in the specified namespace. Applying it can help determine whether a network policy is the cause of the issue. Remove it once testing is done, for example with kubectl delete networkpolicy allow-all -n <namespace>.

Additional Tools and Techniques

kubectl Commands

Use kubectl for deeper insight into your cluster’s state. Commands such as kubectl describe and kubectl get provide detailed information about services, pods, and network policies, which helps you gather evidence and diagnose issues effectively.
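kubectl get can also print objects as JSON (-o json), which is convenient for scripting. As a hypothetical example, the Python sketch below summarizes the output of kubectl get endpoints <service-name> -o json, separating ready pod IPs from not-ready ones. It assumes the v1 Endpoints format; newer clusters also expose the same data as EndpointSlice objects, which this sketch does not parse.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical helpers for illustration; not part of any Kubernetes library.


def endpoint_ips(endpoints: Dict[str, Any]) -> Tuple[List[str], List[str]]:
    """Split a v1 Endpoints object into ready and not-ready pod IPs."""
    ready: List[str] = []
    not_ready: List[str] = []
    for subset in endpoints.get("subsets") or []:
        ready += [addr["ip"] for addr in subset.get("addresses") or []]
        not_ready += [addr["ip"] for addr in subset.get("notReadyAddresses") or []]
    return ready, not_ready


def describe_endpoints(raw_json: str) -> str:
    """Return a one-line diagnosis for `kubectl get endpoints <svc> -o json` output."""
    obj = json.loads(raw_json)
    name = obj.get("metadata", {}).get("name", "<unknown>")
    ready, not_ready = endpoint_ips(obj)
    if ready:
        return f"{name}: {len(ready)} ready endpoint(s): {', '.join(ready)}"
    if not_ready:
        return f"{name}: no ready endpoints; {len(not_ready)} pod(s) not ready"
    return f"{name}: no endpoints; check the selector and that matching pods are running"
```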

Monitoring and Alerts

Implement monitoring and alerting for your cluster to track service health and performance. Tools such as Prometheus and Grafana can visualize metrics and raise alerts for service discovery problems, for example a rising rate of DNS errors.

Conclusion

Effective service discovery is vital for the smooth operation of Kubernetes clusters. By addressing DNS resolution issues, service connectivity problems, and network policy constraints, you can ensure that services find and communicate with each other seamlessly.

Regularly monitoring CoreDNS logs and reviewing network policies are essential practices for maintaining a robust service discovery mechanism. Additionally, utilizing tools like kubectl, implementing comprehensive monitoring solutions, and performing routine tests will help you identify and resolve issues promptly.

By applying these techniques and best practices, you can enhance the reliability and performance of your Kubernetes environment and ensure that your applications remain accessible.

Thank you for reading! If you have any questions or need assistance with Kubernetes service discovery, feel free to reach out or leave a comment.

For more detailed troubleshooting tips on Kubernetes pods, check out my blog Troubleshooting Pod Failures in Kubernetes